Retrieve Azure DevOps Pipeline current/older metadata

Use the Azure DevOps REST API to automate multiple interdependent pipelines and run them in harmony

1. The Problem

I believe that to understand any blog post well, it helps to first understand the requirement behind it.

We use several microservices in our application, which is ultimately deployed using Kubernetes. Each microservice has two CI pipelines:

- Base pipeline: Used to install all dependencies.

- Build pipeline: Used to build the service on top of the base pipeline's image.

The base pipeline runs far less often than the build pipeline, because dependencies change very rarely. Both the base pipeline and the build pipeline produce a Docker image as the end result, which is pushed to our container registry. If a pipeline fails, its Docker image is not pushed.

Initially, I checked them manually every time. That is not as daunting as it sounds, because a few safeguards were already in place:

The Docker image in the build pipeline is built on top of the Docker image from the base pipeline. So even if the latest base pipeline run fails, the build pipeline simply pulls an earlier, successfully pushed base image.

In the rare scenario where both pipelines are triggered simultaneously, I have to manually pause the build pipeline until the base pipeline succeeds. This defeats the purpose of automating with a CI pipeline 😭

As I mentioned earlier, the build pipeline can pull the base Docker image from the registry no matter what the current status of the base pipeline is. This is intentional, so that the CI pipeline does not break. But it also introduces a harmful design flaw: ideally, the build pipeline must have all the latest packages and utilities installed, and an older base image may not have them. Smart people can definitely smell a trade-off here.

So let's summarize this into a checklist that solves all these problems. Ideally, the build pipeline should go through the following checklist before starting its execution:

- Is the base pipeline running?
  - If it is running, wait and check again after some interval using a polling mechanism.
  - Resume as soon as the base pipeline succeeds.
- What is the status of the latest completed base pipeline run?
  - If it succeeded, continue.
  - If it failed, stop the build pipeline.

2. The Solution

With the problem laid out, it's time to go through the solution.

2.1 Personal Access Token (PAT)

We'll need a PAT to establish a connection with Azure DevOps and create its client. Follow Microsoft's official guide to create one. Make sure the PAT has Read access for Build and Release; you can enable this under Edit > Scopes.
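Rather than hard-coding the PAT in the script, a small helper can read it from an environment variable and fail fast when it is missing. This is a minimal sketch; the variable name AZURE_DEVOPS_PAT is a hypothetical choice, not something Azure DevOps defines.

```python
import os


def load_pat(env_var: str = "AZURE_DEVOPS_PAT") -> str:
    """Return the PAT stored in `env_var` (hypothetical name), failing fast if it is missing."""
    pat = os.environ.get(env_var, "").strip()
    if not pat:
        raise RuntimeError(f"Environment variable {env_var} is not set or empty")
    return pat
```

If you go this route in Azure Pipelines, keep in mind that secret variables are not exposed to scripts as environment variables automatically; they have to be mapped explicitly in the task's env section.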

Warning: ⚠⚠⚠

Copy the PAT somewhere safe right away. Azure DevOps shows it only once, at the time of creation, and you cannot retrieve it later.

2.2 Creating the Azure DevOps client

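Azure DevOps provides extensive support through its REST API 🚀. We could call the REST API directly over HTTPS, but then we would have to handle authentication, requests and response parsing ourselves before writing any custom logic. So instead I am using the Azure DevOps Python API (the azure-devops package), which wraps the same REST API.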
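For comparison, here is roughly what calling the REST API directly involves. This sketch only prepares (it does not send) a request to the Builds - List endpoint using the standard library; the organization, project, definition ID and PAT are placeholders, and api-version 7.0 is an assumption about what your organization accepts.

```python
import base64
import urllib.parse
import urllib.request


def builds_list_request(org: str, project: str, definition_id: int,
                        branch: str, pat: str) -> urllib.request.Request:
    """Prepare a GET request for the Azure DevOps 'Builds - List' REST endpoint."""
    query = urllib.parse.urlencode({
        "definitions": definition_id,
        "branchName": branch,                 # e.g. "refs/heads/develop"
        "queryOrder": "queueTimeDescending",  # newest run first
        "$top": 1,                            # only the latest run
        "api-version": "7.0",                 # assumed API version
    }, safe="$")
    url = (f"https://dev.azure.com/{urllib.parse.quote(org)}/"
           f"{urllib.parse.quote(project)}/_apis/build/builds?{query}")
    # A PAT is sent as HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
```

Sending it would still require urllib.request.urlopen, JSON decoding and error handling on top; the Python SDK takes care of that plumbing.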

Let's see how to create the client:

```python
from azure.devops.connection import Connection
from msrest.authentication import BasicAuthentication

# Set up a client to connect to Azure DevOps.
# The PAT is sent as Basic auth with an empty user name.
credentials = BasicAuthentication("", "YOURPAT")
connection = Connection(base_url="https://dev.azure.com/YOURORG", creds=credentials)

core_client = connection.clients.get_core_client()
build_client = connection.clients.get_build_client()  # used to query pipeline runs
```

2.3 Retrieve Builds

Here I retrieve specific runs (builds) of the base pipeline in focus with the help of filters. The best part of this REST API is that it reflects the latest state of each run right away 😍.

```python
builds = build_client.get_builds(
    project="<Your project name>",
    repository_id="<Your repo id>",
    repository_type="TfsGit",  # for Git based repos
    branch_name="refs/heads/develop",  # only runs for this branch; in my case it is develop. ⚠ Do not remove 'refs/heads/'
    definitions=[<Your pipeline definitionId>],  # integer definitionId(s) of the pipeline(s)
)
```

Tip: 🤝🤝🤝

I struggled a bit to find the 'definitionId'. But there's a neat trick: open your pipeline's build page (the one showing the history of all runs) and you will find it in the URL.
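If you prefer not to copy it by hand, the ID can be pulled out of that URL programmatically. A minimal sketch, assuming the URL has the ...?definitionId=NUMBER form of the run-history page; the example URL uses placeholders.

```python
from urllib.parse import parse_qs, urlparse


def definition_id_from_url(pipeline_url: str) -> int:
    """Extract the numeric definitionId query parameter from a pipeline page URL."""
    params = parse_qs(urlparse(pipeline_url).query)
    if "definitionId" not in params:
        raise ValueError(f"No definitionId found in {pipeline_url!r}")
    return int(params["definitionId"][0])


print(definition_id_from_url("https://dev.azure.com/YOURORG/YOURPROJECT/_build?definitionId=183"))  # prints 183
```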

2.4 Polling Mechanism

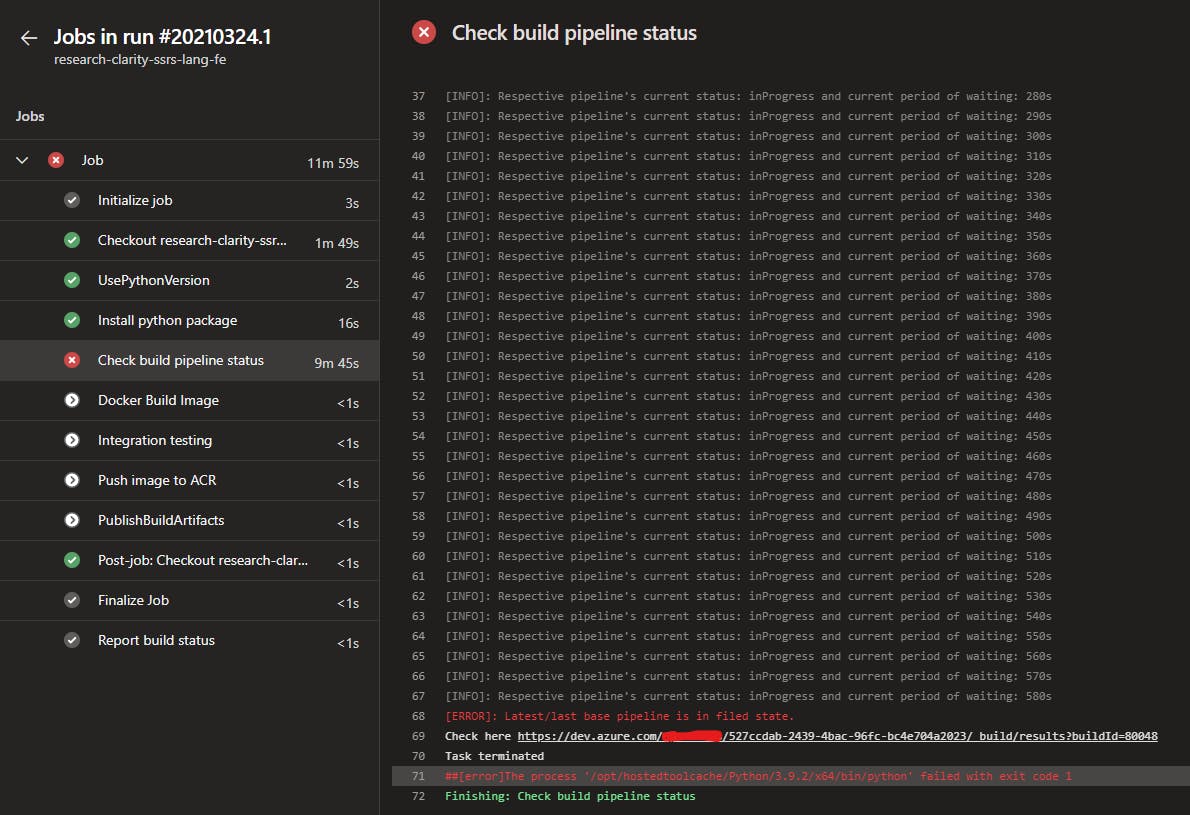

This is the most critical part, as it ticks off every item in the checklist above. The script first checks the result of the latest base pipeline run and stops if it failed; otherwise it checks whether the run has completed. This check is repeated every 10 seconds, with a timeout of 30 minutes.
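Stripped of the API calls, the decision made on each iteration fits in a small pure function, which is also easy to unit test. This is a sketch of the logic just described, not the script itself; the Decision names are my own, while the status and result strings are values the Builds API returns.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    STOP = "stop"        # latest base run failed: abort the build pipeline
    PROCEED = "proceed"  # latest base run completed without failing
    WAIT = "wait"        # base run still queued or in progress: poll again


def decide(status: str, result: Optional[str]) -> Decision:
    """Map the latest base-pipeline run's status/result to the next action."""
    # `result` is only populated once a run completes, so a failure is checked first.
    if result == "failed":
        return Decision.STOP
    if status == "completed":
        return Decision.PROCEED
    return Decision.WAIT
```

Like the checks in the script, this treats a canceled or partiallySucceeded run as good enough to proceed; requiring result == "succeeded" would be the stricter choice.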

```python
import logging
import sys
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# build_client is created as shown in section 2.2.
# Poll every 10 seconds, for at most 30 minutes (1800 s).
for step_time in range(10, 1810, 10):
    builds = build_client.get_builds(
        project="<Your project name>",
        repository_id="<Your repo id>",
        repository_type="TfsGit",
        branch_name="refs/heads/develop",
        definitions=[<Your pipeline definitionId>],
        query_order="queueTimeDescending",  # newest run first, so value[0] is the latest
    )

    # Checking last result: stop if the latest base run failed
    if builds.value[0].result == "failed":
        logger.error(
            f"Latest/last base pipeline is in failed state.\nCheck here {builds.value[0].url.replace('_apis/build/Builds/', '_build/results?buildId=')}"
        )
        sys.exit("Task terminated")

    # Checking current status: move on once the latest base run has completed
    if builds.value[0].status == "completed":
        logger.info("Base pipeline is ready, moving ahead")
        break

    # Time out after 30m (checked last, so a final completed/failed result still counts)
    if step_time == 1800:
        logger.error(
            f"Time out as base pipeline is taking more than 30m.\nCheck the pipeline to investigate here {builds.value[0].url.replace('_apis/build/Builds/', '_build/results?buildId=')}"
        )
        sys.exit("Task terminated")

    logger.info(
        f"Respective pipeline's current status: {builds.value[0].status} and current period of waiting: {step_time}s"
    )
    time.sleep(10)
```
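A variation on the same loop: instead of counting 10-second steps up to 1800, track a deadline with time.monotonic(), so the 30-minute limit holds even when the API calls themselves are slow. In this sketch fetch_latest is a hypothetical callable returning the latest run's (status, result); in the real script it would wrap build_client.get_builds(...). Injecting sleep and clock is a design choice that lets the loop be tested without waiting.

```python
import time
from typing import Callable, Optional, Tuple


def wait_for_base_pipeline(
    fetch_latest: Callable[[], Tuple[str, Optional[str]]],
    timeout_s: float = 1800,
    interval_s: float = 10,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until the latest base run completes.

    Returns True if it completed without failing, False if it failed.
    Raises TimeoutError if it is still running after `timeout_s` seconds.
    """
    deadline = clock() + timeout_s
    while True:
        status, result = fetch_latest()
        if result == "failed":
            return False
        if status == "completed":
            return True
        if clock() >= deadline:
            raise TimeoutError(f"Base pipeline still '{status}' after {timeout_s}s")
        sleep(interval_s)
```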

I also added a little extra logging, so that a failure points straight to the failed run:

```python
logger.error(
    f"Latest/last base pipeline is in failed state.\nCheck here {builds.value[0].url.replace('_apis/build/Builds/', '_build/results?buildId=')}"
)
```

and, while waiting, the current status and how long it has been waiting:

```python
logger.info(
    f"Respective pipeline's current status: {builds.value[0].status} and current period of waiting: {step_time}s"
)
```
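The url.replace(...) trick works because a run's REST API URL and its results page differ only in the path. A slightly more defensive sketch of the same conversion is below; the sample URLs in the test are illustrative, and for a URL of any other shape it returns the input unchanged rather than a broken link.

```python
import re

# Matches a run's REST URL, e.g. https://dev.azure.com/org/<projectId>/_apis/build/Builds/123
_API_BUILD_URL = re.compile(r"^(?P<base>.+?)/_apis/build/Builds/(?P<build_id>\d+)$", re.IGNORECASE)


def build_results_url(api_url: str) -> str:
    """Turn a build's REST API URL into the URL of its results page in the web UI."""
    match = _API_BUILD_URL.match(api_url)
    if match is None:
        return api_url  # unknown shape: keep the API URL instead of producing a broken link
    return f"{match['base']}/_build/results?buildId={match['build_id']}"
```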

3. Integration with Pipeline

This part depends on your use case and preferences. This is how I do it:

- I put the script at a fixed location in every repo.

- I set the Python version to 3.x.

- I install the required Python packages with a command-line step.

- I use pipeline variables to pass parameters to the script, such as the PAT, which I store as a secret variable.

- Finally, I use the Python Script task of Azure DevOps Pipelines to run it.
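The pipeline passes --personal_access_token, --repo_id and --pipeline_def_id to the script, so its argument parsing could look roughly like this sketch. The argument names come from the pipeline definition; the types, help texts and the parse_args helper are my assumptions.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the arguments passed in by the PythonScript@0 task."""
    parser = argparse.ArgumentParser(description="Wait for the latest base pipeline run.")
    parser.add_argument("--personal_access_token", required=True,
                        help="Azure DevOps PAT (pass it from a secret pipeline variable)")
    parser.add_argument("--repo_id", required=True, help="Repository ID (GUID)")
    parser.add_argument("--pipeline_def_id", required=True, type=int,
                        help="definitionId of the base pipeline")
    return parser.parse_args(argv)
```

With type=int, args.pipeline_def_id can go straight into definitions=[args.pipeline_def_id] when calling get_builds.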

```yaml
- task: UsePythonVersion@0
  inputs:
    versionSpec: '3.x'
    addToPath: true
    architecture: 'x64'

- task: CmdLine@2
  displayName: Install python package
  inputs:
    script: 'python3 -m pip install requests azure-devops'

- task: PythonScript@0
  displayName: Check base pipeline status
  inputs:
    scriptSource: 'filePath'
    scriptPath: '$(System.DefaultWorkingDirectory)/tests/base_pipeline_status.py'
    arguments: '--personal_access_token $(PERSONALACCESSTOKEN) --repo_id e589ca4a-16ff-453b-b101-2a9f21542d76 --pipeline_def_id 183' # the PAT comes from the secret pipeline variable PERSONALACCESSTOKEN
```

Tip: 🤝🤝🤝

I always run this task first, so that if it fails, none of the other tasks run unnecessarily.

Here is a screenshot of the logic in action: