This tutorial will help you build a ResNet model of any desired depth (number of layers) from scratch.

ResNet has always been one of my favourite architectures, and I have used its core idea of skip connections many times. It is fairly old now: ResNetV1 (Deep Residual Learning for Image Recognition) and ResNetV2 (Identity Mappings in Deep Residual Networks) came out in 2015 and 2016, but its core concept is still widely used today. So you must be thinking… why this tutorial in 2020?

There are two primary reasons for this tutorial:

Build ResNetV2 with any desired depth, not just ResNet50, ResNet101 or ResNet152 (as included in Keras Applications)

Use TensorFlow 2.x

This tutorial is divided into two main parts. Part 1 briefly discusses ResNet, and Part 2 focuses on the code. Keep one thing in mind: the primary goal of this tutorial is to showcase the code for building a ResNet model of any desired depth from scratch.

Part 1: ResNet in Brief

One of the biggest problems of deep networks is vanishing and exploding gradients, which restricts how deep we can go. Refer to this video if you are not familiar with the problem or want a refresher.

So the question is: how does ResNet solve the problem?

I will try to put it in simple words. The core idea of ResNet is the skip connection: it takes the activation from one layer and feeds it to a later layer (which can be much deeper). Consider the vanishing gradient problem: as we go deeper, the gradients flowing back to the earlier layers shrink so much that those layers barely learn and effectively stop contributing. But if we bring the activation from an earlier layer and add it to the current layer before the activation, that signal reaches the deeper layer directly, and during backpropagation the gradient also has a direct path back through the addition.

Refer to this video to understand it more mathematically.

Now, without wasting time, let us move on to the coding part.
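As a compact summary of that argument, here are the residual equations in the notation of the ResNetV2 paper (a sketch assuming identity shortcuts: $x_l$ is the input to the $l$-th residual unit, $\mathcal{F}$ its stacked layers with weights $\mathcal{W}_l$, and $\mathcal{E}$ the loss):

$$x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l)$$

$$x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)$$

$$\frac{\partial \mathcal{E}}{\partial x_l} = \frac{\partial \mathcal{E}}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)\right)$$

The constant $1$ in the last line is the skip connection at work: part of the gradient from a deep layer $x_L$ reaches $x_l$ unchanged, however small the gradients through the stacked layers become.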

Part 2: Coding

Note: I have published a repo containing all the code, and it can be found here.

- First, we create a residual block, the basic building block of our ResNet, which we will use as a skip connector. ResNetV2 brought one slight modification: in V1 the convolution layer comes first, followed by batch normalization and activation, whereas V2 uses pre-activation, applying batch normalization and activation before the convolution.

```python
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D, Dropout
from tensorflow.keras.regularizers import l2


# Identity Block or Residual Block or simply Skip Connector
def residual_block(X, num_filters: int, stride: int = 1, kernel_size: int = 3,
                   activation: str = 'relu', bn: bool = True, conv_first: bool = True):
    """
    Parameters
    ----------
    X : Tensor layer
        Input tensor from previous layer
    num_filters : int
        Conv2D number of filters
    stride : int by default 1
        Stride square dimension
    kernel_size : int by default 3
        Conv2D square kernel dimensions
    activation : str by default 'relu'
        Activation function to use (None skips the activation)
    bn : bool by default True
        Whether to use BatchNormalization
    conv_first : bool by default True
        conv-bn-activation (True) or bn-activation-conv (False)
    """
    conv_layer = Conv2D(num_filters,
                        kernel_size=kernel_size,
                        strides=stride,
                        padding='same',
                        kernel_regularizer=l2(1e-4))

    if conv_first:
        # V1-style order: conv -> BN -> activation, followed by dropout
        X = conv_layer(X)
        if bn:
            X = BatchNormalization()(X)
        if activation is not None:
            X = Activation(activation)(X)
        X = Dropout(0.2)(X)
    else:
        # V2 pre-activation order: BN -> activation -> conv
        if bn:
            X = BatchNormalization()(X)
        if activation is not None:
            X = Activation(activation)(X)
        X = conv_layer(X)
    return X
```

Inside the network the convolution is not always applied first (V2 units use the BN → activation → conv order), so we need an if-else statement.

- Next, we add the layers. We will use the Keras functional API for this.
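To make the two orderings concrete, here is a minimal, self-contained sketch (not taken from the repo) that builds one V1-style unit (conv → BN → ReLU) and one V2-style pre-activation unit (BN → ReLU → conv) with `tf.keras.Sequential`. The helper names `v1_unit` and `v2_unit` are hypothetical, and unlike `residual_block` they use no dropout or L2 regularization.

```python
import tensorflow as tf
from tensorflow.keras import layers


def v1_unit(filters):
    # ResNetV1 order (post-activation): conv -> BN -> ReLU
    return tf.keras.Sequential([
        layers.Conv2D(filters, 3, padding='same'),
        layers.BatchNormalization(),
        layers.Activation('relu'),
    ])


def v2_unit(filters):
    # ResNetV2 order (pre-activation): BN -> ReLU -> conv
    return tf.keras.Sequential([
        layers.BatchNormalization(),
        layers.Activation('relu'),
        layers.Conv2D(filters, 3, padding='same'),
    ])


x = tf.random.normal((1, 32, 32, 3))
v1, v2 = v1_unit(16), v2_unit(16)
out_v1, out_v2 = v1(x), v2(x)  # calling the models builds them

print([type(layer).__name__ for layer in v1.layers])  # ['Conv2D', 'BatchNormalization', 'Activation']
print([type(layer).__name__ for layer in v2.layers])  # ['BatchNormalization', 'Activation', 'Conv2D']
print(out_v1.shape, out_v2.shape)
```

The V1 unit ends in a ReLU, so its output is never negative; the V2 unit ends in the convolution, so when it is used inside a residual unit nothing sits between the addition and the shortcut path. That clean shortcut is the "identity mapping" the ResNetV2 paper is named after.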

```python
import tensorflow as tf
from tensorflow.keras.layers import Input

# Example settings (assumed; not part of the original snippet), adjust to your data.
# depth should be 9n+2 (eg 56 or 110)
depth = 56
input_shape = (32, 32, 3)  # 32x32 inputs end in 8x8 maps, matching AveragePooling2D(pool_size=8) below
num_classes = 10           # used by the classifier on top

# Model definition
num_filters_in = 32
num_res_block = int((depth - 2) / 9)

inputs = Input(shape=input_shape)

# ResNet V2 performs Conv2D on X before splitting into two paths
X = residual_block(X=inputs, num_filters=num_filters_in, conv_first=True)

# Building stack of residual units
for stage in range(3):
    for unit_res_block in range(num_res_block):
        activation = 'relu'
        bn = True
        stride = 1

        if stage == 0:
            num_filters_out = num_filters_in * 4
            # First layer and first stage
            if unit_res_block == 0:
                activation = None
                bn = False
        else:
            num_filters_out = num_filters_in * 2
            # First layer but not first stage: downsample
            if unit_res_block == 0:
                stride = 2

        # bottleneck residual unit (path one): 1x1 -> 3x3 -> 1x1
        y = residual_block(X,
                           num_filters=num_filters_in,
                           kernel_size=1,
                           stride=stride,
                           activation=activation,
                           bn=bn,
                           conv_first=False)
        y = residual_block(y,
                           num_filters=num_filters_in,
                           conv_first=False)
        y = residual_block(y,
                           num_filters=num_filters_out,
                           kernel_size=1,
                           conv_first=False)

        if unit_res_block == 0:
            # linear projection residual shortcut connection to match
            # changed dims (path two)
            X = residual_block(X=X,
                               num_filters=num_filters_out,
                               kernel_size=1,
                               stride=stride,
                               activation=None,
                               bn=False)
        X = tf.keras.layers.add([X, y])

    # Update once per stage, after the inner loop; inside it the add() shapes would not match
    num_filters_in = num_filters_out
```

Let us go through it line by line.

→ `inputs = Input(shape=input_shape)` takes the input.

→ The first `residual_block` call creates a convolution layer before the network splits into two paths. Here we reuse the residual block we created earlier.

→ The nested `for` loops create the stack of residual units. Let's discuss this in more detail. After that first convolution, the network splits into two paths that are later added together. In path one, three operations are performed:

batch norm → activation → Conv.

This process is repeated three times and the result is added. To put it in ResNet perspective, after splitting, one sub-network performs the operations above, and its output is added to the activation at the point where we split. So you see, we skipped or jumped over three layers.

Why three layers? It depends on your intuition; it could be two, four or anything else. I found the best results with three, and the authors also suggested two or three layers.

Time to go deeper into the code… literally… :)

→ The outer `for stage in range(3)` loop starts appending stacks of residual units, and the inner loop over `range(num_res_block)` appends that many units to each stage. (A simple trick here: the number of units you stack, and therefore the depth, is set just by the range of the inner loop.)

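→ The three `y = residual_block(...)` calls append the convolution layers of one sub-network after the split.

Here you can see that I have used three residual blocks. Why three? Because I am skipping over three activations.

→ The `if unit_res_block == 0:` branch builds the second sub-network, which is skipped ahead and added later. In the first unit of each stage the number of filters (and, from the second stage on, the feature-map size) changes, so this path needs a 1x1 convolution to match the shape of `y`; in the other units `X` is passed through unchanged.

→ `X = tf.keras.layers.add([X, y])` is where all the magic takes place :). Here we add both sub-networks.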
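Here is the same split-and-add pattern isolated into one self-contained function, a minimal sketch of a single pre-activation bottleneck unit. The name `bottleneck_unit` and its parameters are hypothetical (not from the repo), and unlike the tutorial code it applies no dropout or L2 regularization and does not special-case the very first unit. The shortcut is the identity when shapes already match and a 1x1 convolution (linear projection) otherwise, mirroring the `if unit_res_block == 0:` branch.

```python
import tensorflow as tf
from tensorflow.keras import layers


def bottleneck_unit(x, bottleneck_filters, out_filters, stride=1):
    """Pre-activation bottleneck: (BN -> ReLU -> conv) x 3, then add the shortcut."""
    # Path one: 1x1 (reduces channels, applies the stride) -> 3x3 -> 1x1 (expands channels)
    y = x
    for filters, kernel, s in [(bottleneck_filters, 1, stride),
                               (bottleneck_filters, 3, 1),
                               (out_filters, 1, 1)]:
        y = layers.BatchNormalization()(y)
        y = layers.Activation('relu')(y)
        y = layers.Conv2D(filters, kernel, strides=s, padding='same')(y)

    # Path two: identity if shapes match, otherwise a 1x1 linear projection
    shortcut = x
    if stride != 1 or x.shape[-1] != out_filters:
        shortcut = layers.Conv2D(out_filters, 1, strides=stride, padding='same')(x)

    return layers.add([shortcut, y])  # the skip connection


inputs = tf.keras.Input(shape=(32, 32, 128))
h = bottleneck_unit(inputs, bottleneck_filters=128, out_filters=256, stride=2)  # projection shortcut
h = bottleneck_unit(h, bottleneck_filters=128, out_filters=256)                 # identity shortcut
model = tf.keras.Model(inputs, h)

out = model(tf.zeros((2, 32, 32, 128)))
print(out.shape)  # (2, 16, 16, 256)
```

The first call changes both the channel count and the feature-map size, so it needs the projection; the second call receives a tensor that already has 256 channels and simply adds it back.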

- The last part is to connect a fully connected classifier on top of the network.

```python
from tensorflow.keras.layers import (Activation, AveragePooling2D, BatchNormalization,
                                     Dense, Dropout, Flatten)
from tensorflow.keras.models import Model

# Add classifier on top.
# v2 has BN-ReLU before Pooling
X = BatchNormalization()(X)
X = Activation('relu')(X)
X = AveragePooling2D(pool_size=8)(X)
y = Flatten()(X)
y = Dense(512, activation='relu')(y)
y = BatchNormalization()(y)
y = Dropout(0.5)(y)
outputs = Dense(num_classes, activation='softmax')(y)

# Instantiate model.
model = Model(inputs=inputs, outputs=outputs)
```

You can customize this part as you wish.
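As one example of such a customization, here is a hedged sketch (the `classifier_head` helper and `num_classes=10` are assumptions for illustration, not from the repo) that swaps `AveragePooling2D(pool_size=8)` + `Flatten()` for `GlobalAveragePooling2D()`. The original head is sized for an 8x8 final feature map, which is what 32x32 inputs produce; global pooling averages over whatever spatial size arrives, so the same head also works for other input resolutions.

```python
import tensorflow as tf
from tensorflow.keras import layers


def classifier_head(features, num_classes):
    """BN-ReLU, global average pooling, then the same dense layers as the tutorial."""
    x = layers.BatchNormalization()(features)
    x = layers.Activation('relu')(x)
    x = layers.GlobalAveragePooling2D()(x)  # averages over any H x W
    x = layers.Dense(512, activation='relu')(x)
    x = layers.BatchNormalization()(x)
    x = layers.Dropout(0.5)(x)
    return layers.Dense(num_classes, activation='softmax')(x)


# Spatial size left open; 512 channels as produced by the last stage in the tutorial
features = tf.keras.Input(shape=(None, None, 512))
head = tf.keras.Model(features, classifier_head(features, num_classes=10))

for size in (8, 14):
    probs = head(tf.random.normal((2, size, size, 512)))
    print(size, probs.shape)
```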

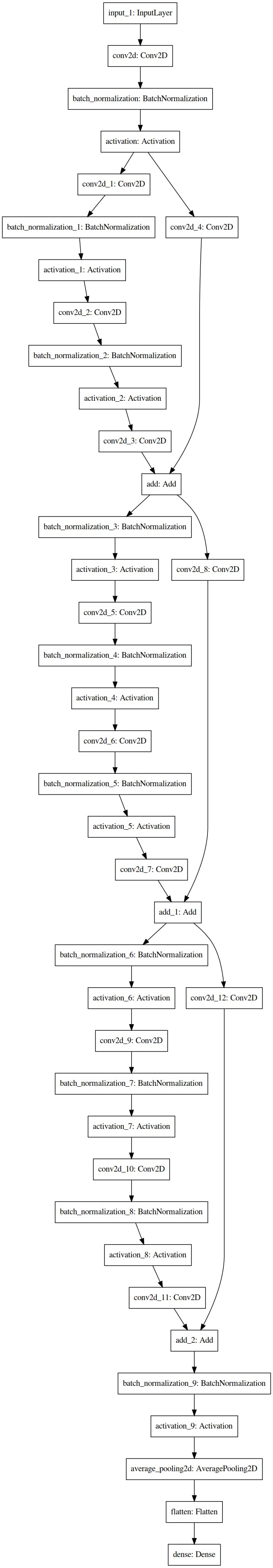

Sample Model architecture with depth 11


Some Key Things to Note

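I have written the comment ‘depth should be 9n+2’ because each residual unit skips over three layers: the three stages of n such units give 9n layers, and the first convolution and the classifier on top account for the remaining 2. Choosing the depth according to this formula ensures that `num_res_block` comes out as a whole number.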
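Since `int((depth - 2) / 9)` in the model definition silently truncates a depth that does not fit 9n+2, a small guard can help. The helper `num_res_blocks_for_depth` below is a hypothetical sketch, not part of the repo: it returns n for a valid depth and raises otherwise.

```python
def num_res_blocks_for_depth(depth: int) -> int:
    """Return n for depth = 9n + 2, raising if depth does not fit the formula."""
    if depth < 11 or (depth - 2) % 9 != 0:
        raise ValueError(f"depth should be 9n+2 with n >= 1 (e.g. 11, 56, 110), got {depth}")
    return (depth - 2) // 9


for depth in (11, 56, 110):
    print(depth, num_res_blocks_for_depth(depth))
# 11 1
# 56 6
# 110 12
```

Using it in place of `int((depth - 2) / 9)` turns a typo such as `depth=50` into an immediate error instead of a quietly shallower network.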

Many adjustments are made to strides and kernel sizes to match the tensor shapes. I would suggest writing down one iteration of the loop on paper to trace all the adjustments and understand them more thoroughly.
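If you prefer code to paper, this pure-Python sketch replays the loop logic of the model definition without TensorFlow. The function `trace_resnet_v2` is hypothetical (not part of the repo); it assumes a 32x32 input and `num_filters_in = 32` as in the code above, and prints the filter counts, stride, feature-map size and shortcut type of every residual unit.

```python
def trace_resnet_v2(depth=11, input_size=32, num_filters_in=32):
    """Print filters, stride, feature-map size and shortcut type per residual unit."""
    num_res_block = (depth - 2) // 9
    size = input_size  # the first conv uses stride 1 with 'same' padding
    for stage in range(3):
        for unit in range(num_res_block):
            stride = 2 if (stage > 0 and unit == 0) else 1
            num_filters_out = num_filters_in * (4 if stage == 0 else 2)
            size = (size + stride - 1) // stride  # 'same' padding: ceil(size / stride)
            shortcut = "1x1 projection" if unit == 0 else "identity"
            print(f"stage {stage} unit {unit}: bottleneck {num_filters_in} -> out {num_filters_out}, "
                  f"stride {stride}, map {size}x{size}, shortcut: {shortcut}")
        num_filters_in = num_filters_out  # updated once per stage, as in the model code
    return size, num_filters_in


trace_resnet_v2(depth=11)
# stage 0 unit 0: bottleneck 32 -> out 128, stride 1, map 32x32, shortcut: 1x1 projection
# stage 1 unit 0: bottleneck 128 -> out 256, stride 2, map 16x16, shortcut: 1x1 projection
# stage 2 unit 0: bottleneck 256 -> out 512, stride 2, map 8x8, shortcut: 1x1 projection
```

The last stage ends with 8x8 maps and 512 channels, which is why `AveragePooling2D(pool_size=8)` in the classifier reduces each map to a single value for 32x32 inputs.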

Do not get confused by the terms skip connector and residual block. The connection between them is as follows: after the split, two paths are created. Path one has three layers (three residual blocks). Path two carries `X` forward, either unchanged or, in the first unit of each stage, through a single 1x1 residual block that projects it to the new shape. The output of path two is then brought over to path one and added before the next activation. Because it jumps over the three blocks of path one, this path becomes the skip connector.

Part 3: End Notes and some extras

I would suggest you go through my GitHub repo ‘ResNet-builder’, as it includes many more APIs that I thought might be out of scope for this blog. This blog was restricted to building a ResNet network, but a complete ResNet system needs much more functionality, such as a data loader, an inference generator and model performance visualization. I have included all these APIs.

The repo also supports a variety of configurations to build a model.

I have tried to keep the API as intuitive as possible, but if you have any questions you can connect with me on LinkedIn.