Testing in a CI/CD Pipeline Part 2: Integration testing

Why, how, when, and where to perform testing in a CI/CD pipeline.

This is part 2 of the Testing in a CI/CD Pipeline series. It is advisable to read part 1 first 🤓.

1. Integration testing in brief 💼

Integration testing is different from both unit testing and system testing. Here it is in brief, since the main intent of this guide is to show how to integrate it into a CI/CD pipeline:

- In unit testing, tests verify the correctness of individual small units (components) of the system.

- In contrast, integration testing is a testing stage where two or more software units are joined and tested as one entity.

- Integration testing works best in a CI/CD pipeline, and is easiest to integrate, if your system is built using a microservice approach.
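To make the distinction concrete, here is a toy sketch (the functions `parse_record` and `summarize` are hypothetical and not part of the author's service): each unit gets its own unit test, while the integration test feeds the output of one unit into the other and checks the combined result.

```python
import json


def parse_record(line):
    """Unit 1 (hypothetical): parse one NDJSON line into a dict."""
    return json.loads(line)


def summarize(records):
    """Unit 2 (hypothetical): count records per 'type' field."""
    counts = {}
    for record in records:
        counts[record["type"]] = counts.get(record["type"], 0) + 1
    return counts


def test_parse_record_unit():
    # Unit test: parse_record on its own
    assert parse_record('{"type": "a"}') == {"type": "a"}


def test_summarize_unit():
    # Unit test: summarize on its own, with hand-built input
    assert summarize([{"type": "a"}, {"type": "a"}]) == {"a": 2}


def test_parse_and_summarize_integration():
    # Integration test: the two units joined and tested as one entity
    raw = '{"type": "a"}\n{"type": "b"}\n{"type": "a"}'
    records = [parse_record(line) for line in raw.splitlines()]
    assert summarize(records) == {"a": 2, "b": 1}


if __name__ == "__main__":
    test_parse_record_unit()
    test_summarize_unit()
    test_parse_and_summarize_integration()
    print("all tests passed")
```

In a microservice setup the "joined units" are usually whole services talking over the network, which is what the rest of this guide tests.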

2. General mechanics

Why

- To ensure that every individual microservice behaves correctly.

- Buggy code is caught in the CI pipeline and never gets deployed.

How

- Run a realistic example (mimicking a real-world use case) against the microservice. The expected result must be known beforehand so that it can be compared with the actual output of the test.
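As a self-contained illustration of this idea, the sketch below starts a stand-in service with Python's built-in `http.server`, posts a sample payload, and compares the response with a result known beforehand. The handler, its `/api/IDA` path, the `job` field, and the `ida_output_path` response field only imitate the author's service and are assumptions.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeIdaHandler(BaseHTTPRequestHandler):
    """Stand-in for the real microservice (hypothetical behaviour)."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"ida_output_path": f"out/{payload['job']}/IDA.ndjson"})
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(body.encode())

    def log_message(self, *args):
        pass  # keep the demo output quiet


def run_integration_check(url, payload, expected_file):
    """POST a realistic payload and compare the output with a known result."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        data = json.load(response)
    return data.get("ida_output_path", "").split("/")[-1] == expected_file


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), FakeIdaHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/api/IDA"
    print(run_integration_check(url, {"job": "42"}, "IDA.ndjson"))  # True
    server.shutdown()
    server.server_close()
```

In a real pipeline the stand-in server is replaced by the freshly built container, and the expected value comes from your own sample data.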

When

- Integration testing must be done during the CI pipeline.

- It should be triggered after the microservice is successfully built.

Where

- It is always performed in the remote repository's CI (e.g. GitHub, GitLab), not locally.

- It should be the second step of any CI/CD pipeline, right after the build.

3. Implementation in Azure DevOps pipeline 🚀

- If your microservice exposes an endpoint (as it will in most cases), all you need to do is send a request to it over its REST API (or whatever interface your microservice supports).

- In my case, the complete microservice was packaged and containerized using Docker. I used a simple Python script to extract the URL, send a POST request to the REST API, and compare the output.

Let's first go through the test script and then the CI pipeline.

3.1 Test script

- The core of the script reads some sample data, sends a POST request, and finally compares the result. The bare minimum would be:

```python
import json

import requests

# `url` is the endpoint extracted in section 3.2
with open('./tests/sample_data.json', 'r') as f:
    payload = json.load(f)

header = {"Content-Type": "application/json"}
response = requests.request('POST', url, headers=header, json=payload)
response_data = response.json()

assert response_data['ida_output_path'].split('/')[-1] == 'IDA.ndjson', 'Not Received expected output, test is failed.'  # You may use some other method to compare 😁
```

- This only works in the ideal scenario, which you will rarely have in practice, and it defeats our original purpose: if the request or the comparison fails, the script crashes without telling us why.

- We need to make it more robust and versatile for this use case. This can be done by adding two more components:

- A try-except block.

- Console logging at the time of failure, to investigate it.

```python
try:
    response = requests.request('POST', url, headers=header, json=payload)
    response_data = response.json()
except Exception:
    logging.error('An error has occurred. Refer logs to locate error.')
    os.system("docker logs test_api > output.log 2>&1")  # 2>&1 also captures the container's stderr
    time.sleep(3)
    with open('./output.log', 'r') as log:
        print(log.read())
try:
    assert response_data['ida_output_path'].split('/')[-1] == 'IDA.ndjson', 'Not Received expected output, test is failed.'
except (AssertionError, KeyError) as e:
    os.system("docker logs test_api > output.log 2>&1")
    time.sleep(3)
    with open('./output.log', 'r') as log:
        print(log.read())
```

- Some pointers about the code block above:

- I have placed the POST request and the result comparison inside try-except blocks so that an error does not crash the script before the logs are printed.

- As mentioned earlier, the microservice runs inside a Docker container, so all the logs are extracted from that container (see the `docker logs test_api` calls in both `except` blocks).

- Printing logs at the time of failure is very important for investigating the issue. The aim of integration testing is not just to block a buggy release but also to help investigate it.
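One detail worth knowing: `docker logs` replays the container's stdout on stdout and its stderr on stderr, so a plain `docker logs test_api > output.log` only captures the stdout part, and many web servers log to stderr. Below is a minimal sketch of a helper (the name `dump_container_logs` and its `base_cmd` parameter are hypothetical) that captures both streams with `subprocess.run` instead of going through a temporary file.

```python
import subprocess
import sys


def dump_container_logs(container_name, base_cmd=("docker", "logs")):
    """Return the container's stdout and stderr logs as one string.

    base_cmd is configurable only so the helper can be exercised without Docker.
    """
    try:
        result = subprocess.run(
            [*base_cmd, container_name],
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,  # merge stderr into stdout, like `2>&1`
            text=True,
        )
    except FileNotFoundError:
        return f"could not run {base_cmd[0]!r}; is it installed on the agent?"
    return result.stdout


if __name__ == "__main__":
    # Stand-in for `docker logs`: a tiny command that writes to both streams
    fake_logs = (sys.executable, "-c",
                 "import sys; print('to stdout'); print('to stderr', file=sys.stderr)")
    print(dump_container_logs("test_api", base_cmd=fake_logs))
```

Inside each `except` block of the test script you would then call `print(dump_container_logs('test_api'))`.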

3.2 Extracting URL/endpoint

- This may differ quite a lot depending on your use case. This is how I do it:

- First, run the freshly built Docker image as a container on the CI pipeline's worker node.

- Then extract the IP address of the running container.

- Use this IP to build the endpoint URL for the POST request.

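```python
# args and logger are defined in the complete script (section 5)
# Run the freshly built image as a detached container named test_api on port 80
subprocess.call(['docker', 'run', '-d', '-p', '80:80', '--name', 'test_api', args.image_name])

# Ask Docker for the container's IP address on its network(s)
ip = subprocess.getoutput("docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' test_api")
url = f'http://{ip}/api/IDA'
logger.info(f"Testing api @ {url}")
```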
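The complete script in section 5 waits a fixed 30 seconds for the container to start. A common alternative is to poll the endpoint until it answers or a deadline passes. The sketch below assumes that any HTTP response, even an error status such as 405 for a GET on a POST-only route, means the server is up; the function name `wait_until_ready` and its timings are illustrative.

```python
import threading
import time
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def wait_until_ready(url, timeout=60.0, interval=1.0):
    """Poll `url` until the server answers or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True  # 2xx/3xx response: server is up
        except urllib.error.HTTPError:
            return True  # any HTTP status (e.g. 405, 501) still means it is listening
        except OSError:
            time.sleep(interval)  # refused/reset/timed out: not ready yet, retry
    return False


if __name__ == "__main__":
    # Stand-in server: BaseHTTPRequestHandler answers GET with 501, which still counts
    server = HTTPServer(("127.0.0.1", 0), BaseHTTPRequestHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(wait_until_ready(f"http://127.0.0.1:{server.server_port}/api/IDA", timeout=5))
    server.shutdown()
    server.server_close()
```

In the test script, `time.sleep(30)` could then become a call such as `wait_until_ready(url, timeout=60)`, exiting with a failure if it returns `False`. The pipeline then waits only as long as the container actually needs.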

3.3 Integration with CI job

- If you follow a similar flow, all you need is a task that runs the Python script. It can be as simple as:

```yaml
- task: PythonScript@0
  displayName: Integration testing
  inputs:
    scriptSource: 'filePath'
    scriptPath: '$(System.DefaultWorkingDirectory)/tests/integration_testing.py'
    arguments: '--image_name your.repo.io/ida:$(Build.BuildNumber)'
```

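- There are two more important points to keep in mind when implementing integration testing:

- Placement: it should run right after the Docker build and before the Docker push.

- Failure handling: if the test fails, the script must stop the pipeline from proceeding further. This is achieved by exiting with a non-zero status. Note that a bare `sys.exit()` exits with status 0, which counts as success, so pass a message (or a non-zero code):

```python
import sys

sys.exit('Task terminated')  # prints the message to stderr and exits with status 1
```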
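To see how a non-zero exit status keeps later steps from running, here is a stand-alone sketch that imitates the build, test, and push sequence with a hypothetical mini-runner (`run_steps`, `py`, and the stand-in step commands are illustrative; this is not how the Azure DevOps agent is implemented). The failing step uses a `sys.exit('Task terminated')` call like the one in the complete script.

```python
import subprocess
import sys


def run_steps(steps):
    """Run (name, argv) steps in order; stop at the first non-zero exit status.

    Returns the names of the steps that actually ran.
    """
    ran = []
    for name, argv in steps:
        ran.append(name)
        result = subprocess.run(argv)
        if result.returncode != 0:
            print(f"{name} failed with exit status {result.returncode}; stopping")
            break
    return ran


def py(code):
    """Build an argv that runs a Python snippet with the current interpreter."""
    return [sys.executable, "-c", code]


if __name__ == "__main__":
    steps = [
        ("Docker build (stand-in)", py("print('built')")),
        ("Integration testing (stand-in)", py("import sys; sys.exit('Task terminated')")),
        ("Docker push (stand-in)", py("print('pushed')")),
    ]
    print(run_steps(steps))  # the push step never runs
```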

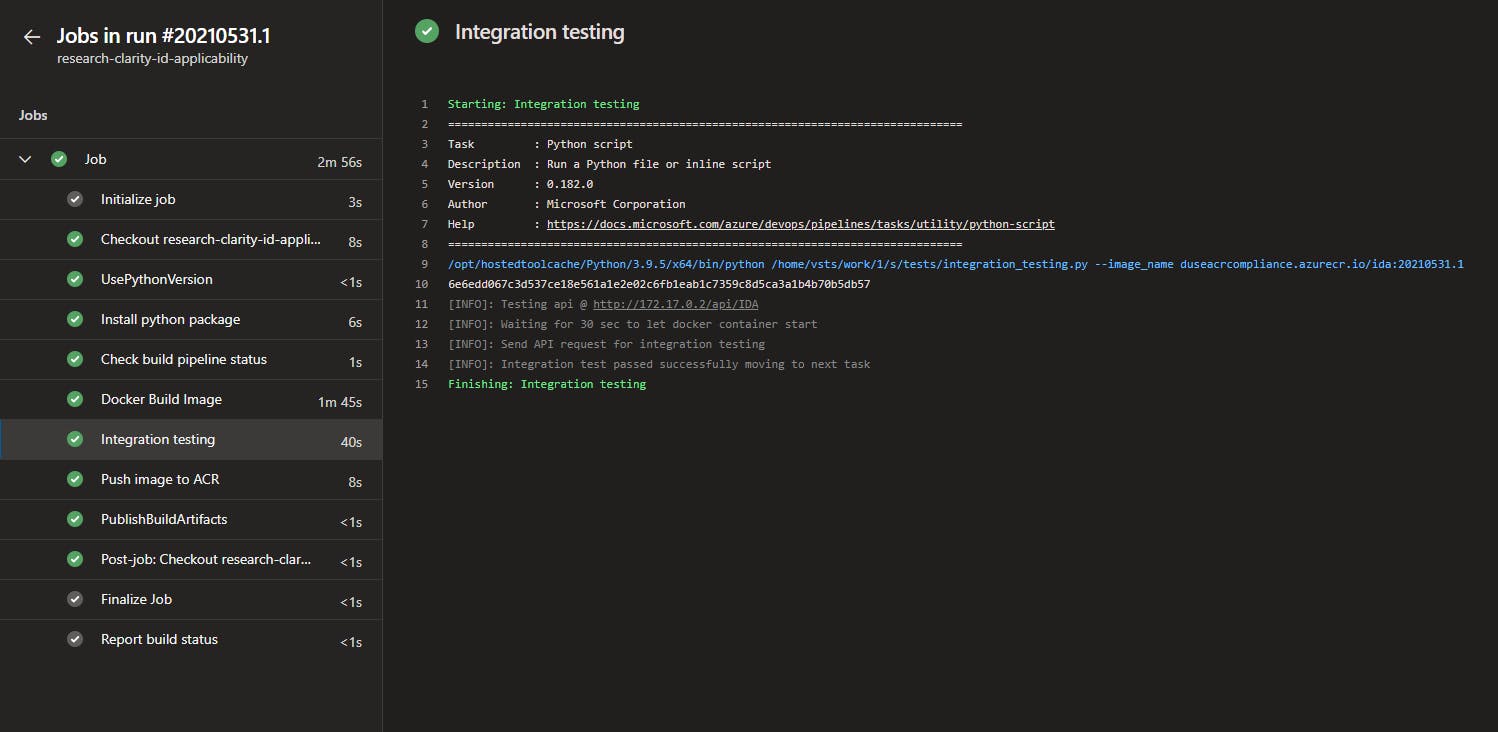

4. Working/Demo

Img: Case- Passing of Integration test

Img: Case- Passing of Integration test

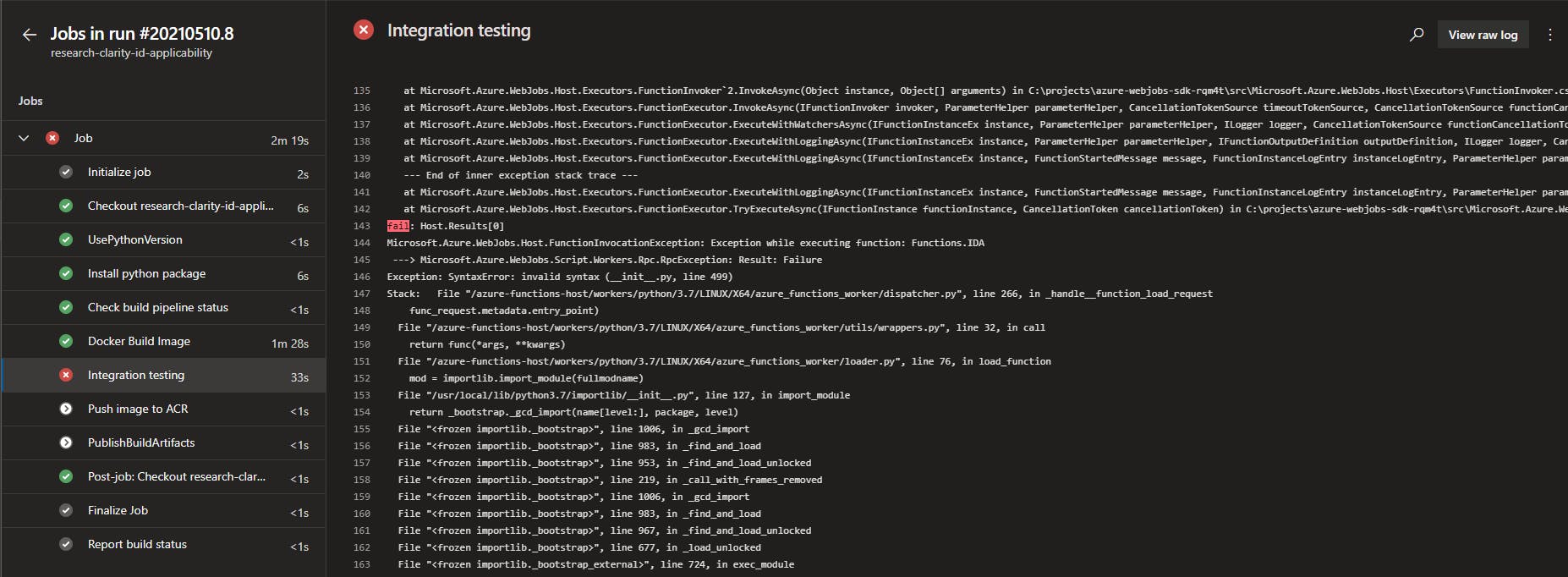

Img: Case - failing integration test

- As you can see, no further tasks were executed after the integration test failed, and consequently none of the connected CD tasks ran either.

5. Complete code

- integration_testing.py

```python
import argparse
import json
import logging
import os
import subprocess
import sys
import time

import requests

logging.basicConfig(level=logging.INFO, format='[%(levelname)s]: %(message)s')
logger = logging.getLogger(__name__)

parser = argparse.ArgumentParser()
parser.add_argument('--image_name', type=str, required=True)
args = parser.parse_args()

# Run docker container at port 80
subprocess.call(['docker', 'run', '-d', '-p', '80:80', '--name', 'test_api', args.image_name])
ip = subprocess.getoutput("docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' test_api")
url = f'http://{ip}/api/IDA'
logger.info(f"Testing api @ {url}")

with open('./tests/sample_data.json', 'r') as f:
    payload = json.load(f)
header = {"Content-Type": "application/json"}

logger.info('Waiting for 30 sec to let docker container start')
time.sleep(30)

logger.info("Send API request for integration testing")
try:
    response = requests.request('POST', url, headers=header, json=payload)
    response_data = response.json()
except Exception:
    logger.error('An error has occurred. Refer logs to locate error.')
    # 2>&1 captures the container's stderr as well as its stdout
    os.system("docker logs test_api > output.log 2>&1")
    time.sleep(3)
    with open('./output.log', 'r') as log:
        print(log.read())
    sys.exit('Task terminated')  # non-zero exit status fails the pipeline task

try:
    assert response_data['ida_output_path'].split('/')[-1] == 'IDA.ndjson', 'Not Received expected output, test is failed.'
except (AssertionError, KeyError) as e:
    os.system("docker logs test_api > output.log 2>&1")
    time.sleep(3)
    with open('./output.log', 'r') as log:
        print(log.read())
    logger.error(f'Problem with {e} Refer logs to locate error')
    logger.error(f"Response from API: {response_data}")
    sys.exit('Task terminated')

logger.info("Integration test passed successfully moving to next task")
```

- CI pipeline

```yaml
trigger:
  branches:
    include:
      - develop
  paths:
    exclude:
      - Dockerfile_base
      - requirements.txt

pool:
  vmImage: 'ubuntu-latest'

steps:
  - checkout: self
    clean: true
    fetchDepth: 1

  - task: UsePythonVersion@0
    inputs:
      versionSpec: '3.x'
      addToPath: true
      architecture: 'x64'

  - task: CmdLine@2
    displayName: Install python package
    inputs:
      script: 'python3 -m pip install requests azure-devops'

  - task: PythonScript@0
    displayName: Check build pipeline status
    inputs:
      scriptSource: 'filePath'
      scriptPath: '$(System.DefaultWorkingDirectory)/tests/base_pipeline_status.py'
      arguments: '--personal_access_token $(PERSONALACCESSTOKEN) --repo_id b978e55f-bf80-466c-86c8-fc0dfe909b2c --pipeline_def_id 179'

  - task: Docker@0
    displayName: 'Docker Build Image'
    inputs:
      azureSubscription: 'Your Subscription'
      azureContainerRegistry: 'Your container registry'
      dockerFile: Dockerfile
      buildArguments: |
        ARG_STORAGEACCOUNTNAME=$(STORAGEACCOUNTNAME)
        ARG_CONTAINERNAME=$(CONTAINERNAME)
        ARG_STORAGEACCOUNTKEY=$(STORAGEACCOUNTKEY)
        ARG_MAXWORKERS=$(MAXWORKERS)
      imageName: 'ida:$(Build.BuildNumber)'

  - task: PythonScript@0
    displayName: Integration testing
    inputs:
      scriptSource: 'filePath'
      scriptPath: '$(System.DefaultWorkingDirectory)/tests/integration_testing.py'
      arguments: '--image_name your.repo.io/ida:$(Build.BuildNumber)'

  - task: Docker@0
    displayName: 'Push image to ACR'
    inputs:
      azureSubscription: 'Your Subscription'
      azureContainerRegistry: 'Your container registry'
      action: 'Push an image'
      imageName: 'ida:$(Build.BuildNumber)'

  - task: PublishBuildArtifacts@1
    inputs:
      PathtoPublish: '$(Build.SourcesDirectory)/kube'
      ArtifactName: 'drop'
      publishLocation: 'Container'
```
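One more consideration: the integration script never removes the `test_api` container. On Microsoft-hosted agents that is harmless because each job gets a fresh VM, but on a self-hosted agent a leftover container would make the next run's `docker run --name test_api` fail with a name conflict. Below is a minimal sketch, assuming you restructure the script around a context manager (the name `running_container` and its `runner` parameter are hypothetical), that clears any stale container first and always force-removes it afterwards.

```python
import contextlib
import subprocess


@contextlib.contextmanager
def running_container(image, name="test_api", runner=subprocess.run):
    """Start `image` detached as `name` and always force-remove it on exit.

    `runner` defaults to subprocess.run; it is a parameter only so the
    cleanup logic can be checked without Docker.
    """
    # Remove a stale container left over from an earlier run (errors ignored)
    runner(["docker", "rm", "-f", name], check=False)
    runner(["docker", "run", "-d", "-p", "80:80", "--name", name, image], check=True)
    try:
        yield name
    finally:
        # -f stops the container if it is still running, then removes it
        runner(["docker", "rm", "-f", name], check=False)
```

In the script, everything from the `docker inspect` call down to the final `logger.info` would sit inside `with running_container(args.image_name):`. Because `sys.exit()` raises `SystemExit`, the `finally` clause still removes the container when the test fails.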