Testing in a CI/CD Pipeline Part 3: Deployment testing

Why, how, when and where to perform testing in a CI/CD pipeline.

This is part 3 of the Testing in a CI/CD Pipeline series. It is advisable to go through part 1 and part 2 first 🤓.

1. Deployment testing in brief 💼

Deployment testing is different from system or unit testing. Let's look at it briefly, since the main intent of this guide is how to integrate it into a CI/CD pipeline.

- In unit testing, tests are performed to verify the correctness of the system's smallest individual components (units).

- In contrast, deployment testing is a testing stage where two or more software units are combined and tested as one entity, but after release or deployment.

- Deployment testing in a CI/CD pipeline works best and integrates most easily if your system is built using a microservice approach.

2. General mechanics 🧰

Why

- To ensure every individual microservice is working/behaving correctly.

- Buggy code can be caught after release (and ideally before it is exposed to the public) and trigger an automated (or manual, depending on your use case) rollback.
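As a minimal sketch of the automated rollback idea, assuming the microservice runs as a Kubernetes Deployment and the pipeline agent has `kubectl` access: `kubectl rollout undo` is the standard command for reverting a Deployment to its previous revision, while the function names and the injectable `runner` parameter below are hypothetical.

```python
import subprocess
from typing import Callable, List


def rollback_argv(deployment: str, namespace: str) -> List[str]:
    """Build the kubectl command that reverts a Deployment to its previous revision."""
    return ["kubectl", "-n", namespace, "rollout", "undo", f"deployment/{deployment}"]


def rollback_if_failed(
    test_passed: bool,
    deployment: str,
    namespace: str,
    runner: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Roll back the Deployment when the deployment test failed.

    Returns True if a rollback was triggered. `runner` defaults to
    subprocess.run and can be swapped for a fake in unit tests.
    """
    if test_passed:
        return False
    runner(rollback_argv(deployment, namespace), check=True)
    return True
```

Whether to roll back automatically or only alert a human is a per-service decision; the sketch just shows where the hook would sit.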

How

- Run a realistic example (mimicking a real-world use case) against the deployed microservice. The expected result must be known beforehand so that it can be compared with the actual output of the test.
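The "known expected result" idea can be sketched as a small comparison helper. This is an illustrative sketch, not the author's script: `expected` is a hypothetical dict of the fields the service should return for the sample input, and only those fields are checked, so extra fields in the response do not fail the test.

```python
from typing import Any, Dict, List


def mismatches(actual: Dict[str, Any], expected: Dict[str, Any]) -> List[str]:
    """Return one readable entry per expected field that is missing or differs."""
    problems = []
    for key, want in expected.items():
        if key not in actual:
            problems.append(f"missing field '{key}'")
        elif actual[key] != want:
            problems.append(f"field '{key}': expected {want!r}, got {actual[key]!r}")
    return problems


def check_response(actual: Dict[str, Any], expected: Dict[str, Any]) -> None:
    """Raise AssertionError (a non-zero exit in a pipeline) if the output differs."""
    problems = mismatches(actual, expected)
    assert not problems, "Deployment test failed: " + "; ".join(problems)
```

Listing every mismatch, rather than stopping at the first, makes a failed pipeline run easier to investigate.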

When

- Deployment testing must be done as the last component of the CD pipeline.

- It should be triggered after the microservice is successfully deployed.

Where

- It is always performed at your infrastructure/deployment layer (e.g. Kubernetes).

- It should be the last step of any CI/CD pipeline.

3. Implementation in Azure DevOps pipeline 🚀

- If your microservice exposes an endpoint (as it will in most cases), then all you need to do is send a request to it using its REST API (or whatever interface your microservice supports).

- In my case, the complete microservice was packaged and containerized using Docker and deployed to a Kubernetes cluster. I used a simple Python script to build the service URL, send a POST request to the REST API, and compare the output.

- If you have gone through part 2 of this series on integration testing, you will notice that the two have a lot in common. Indeed, the core of both kinds of testing is the same; only the way they are executed differs.

Note: I believe the execution part is more important here than the logic (as the core logic is the same as in integration testing), so I will focus mostly on execution.

3.1 Test script

- As mentioned earlier, integration testing and deployment testing share the same core, so the testing logic is also the same.

- The key difference is that the deployment testing script should be able to test all the microservices. Put in the context of integration testing, the deployment test is a compilation of the individual integration tests of each microservice.

- As the concept of the test script is already explained in detail in part 2, I am skipping it here; to keep this post less cluttered, the overall code is shown at the end.
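One way to keep "a compilation of all individual integration tests" manageable is a table-driven registry instead of `if/elif` chains. This is a hypothetical alternative to the layout of the script in section 5: the `DeploymentTest` dataclass, `TESTS` and `get_test` are illustrative names, while the service names, API names, sample paths and expected file names are taken from that script.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class DeploymentTest:
    service: str                  # k8s Service name exposing the microservice
    api_name: str                 # endpoint under /api/
    sample_path: str              # JSON payload with a known expected result
    check: Callable[[Any], bool]  # validates the response (parsed JSON or plain text)


def _last_segment(path: str) -> str:
    return path.split("/")[-1]


# Adding a microservice means adding one entry here
TESTS: Dict[str, DeploymentTest] = {
    "research-clarity-id-applicability": DeploymentTest(
        service="crs-id-applicability-api",
        api_name="IDA",
        sample_path="./data/sample_data_research-clarity-id-applicability.json",
        check=lambda body: _last_segment(body["ida_output_path"]) == "IDA.ndjson",
    ),
    "research-clarity-id-adv-nonadv": DeploymentTest(
        service="crs-id-adverse",
        api_name="adverse_nonadverse",
        sample_path="./data/sample_data_research-clarity-id-adv-nonadv.json",
        # This service's response body is treated as a plain path string
        check=lambda body: _last_segment(body) == "classifiation_output.ndjson",
    ),
}


def get_test(deployment_name: str) -> DeploymentTest:
    """Look up a registered test, failing with the list of supported names."""
    try:
        return TESTS[deployment_name]
    except KeyError:
        raise ValueError(
            f"Unsupported deployment '{deployment_name}'. "
            f"It must be one of: {', '.join(TESTS)}"
        ) from None
```

The trade-off is one extra level of indirection in exchange for a single place to register each new microservice test.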

3.2 Perform deployment test in the Kubernetes

You may not be using Kubernetes at all, but the idea behind this is platform agnostic and understanding the flow is the key. With that said, let's move on.

- As all the microservices are Kubernetes Deployment apps, we will treat the deployment test as a microservice too. Doing so has several benefits:

  - All networking requirements are handled by Kubernetes. If you maintain multiple environments (like dev, qa, prod), you can use Kubernetes namespaces to run environment-specific tests.

  - As both the client and the server (here, the deployment test service and the microservice) run inside the same Kubernetes cluster, test latency is much lower.

- The deployment test pod, created from its own k8s Deployment, only needs to contain the test script.

- The CD pipeline of every microservice will need a `kubectl exec` task at the end, because all we need to do is run the Python script sitting inside the deployment test pod: `kubectl -n <your namespace> exec po/<deployment test pod name> -- python3 deployment_test.py --namespace <your namespace> --deployment_name <deployment name>`

Note: a later section shows how all of this can be automated using references/variables.
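The deployment test "microservice" described above could be defined roughly as below. This is a minimal sketch under assumptions: the image name `registry.example.com/deployment-test:latest` is hypothetical, the label `app: crs-ai-deployment-test` mirrors the selector used in section 3.4, and the container simply sleeps so the pipeline can `kubectl exec` into it (this assumes the image's `sleep` accepts `infinity`, as GNU coreutils does). The manifest is built as a Python dict and printed as JSON, which `kubectl apply -f` also accepts.

```python
import json
from typing import Any, Dict


def build_test_runner_deployment(
    namespace: str,
    image: str = "registry.example.com/deployment-test:latest",  # hypothetical image
    app_label: str = "crs-ai-deployment-test",
) -> Dict[str, Any]:
    """Build a minimal apps/v1 Deployment that only hosts the test script."""
    labels = {"app": app_label}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app_label, "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "deployment-test",
                            "image": image,
                            # Keep the container idle; tests run only via `kubectl exec`
                            "command": ["sleep", "infinity"],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # e.g. python make_manifest.py | kubectl apply -f -   (script name is hypothetical)
    print(json.dumps(build_test_runner_deployment("dev"), indent=2))
```

Creating one such Deployment per namespace gives each environment (dev, qa, prod) its own test pod.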

3.3 Extracting URL/endpoint

Here comes the magic of Kubernetes, as it handles all the networking by itself. There are several options, but we will use DNS for Services and Pods, since both services are internal to the cluster.

All you need to know:

- Name of the k8s Service that exposes your deployment app (it often has the same name as the deployment).

- Namespace where the pod is currently running.

The URL will then be `http://<k8s service name>.<namespace>.svc.cluster.local/<your remaining endpoint or sub-page>` (assuming the default cluster domain `cluster.local`). For example, if the k8s service is named `my-deployment` and the namespace is `dev`, the URL will be `http://my-deployment.dev.svc.cluster.local/<some-api>`. Using a pod's IP address directly is bad practice, as it changes every time a pod is created or restarted; with the method above, Kubernetes handles this for us.

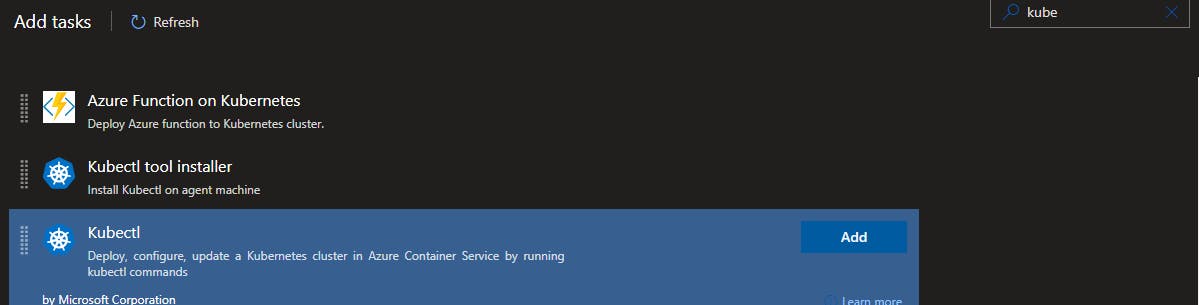

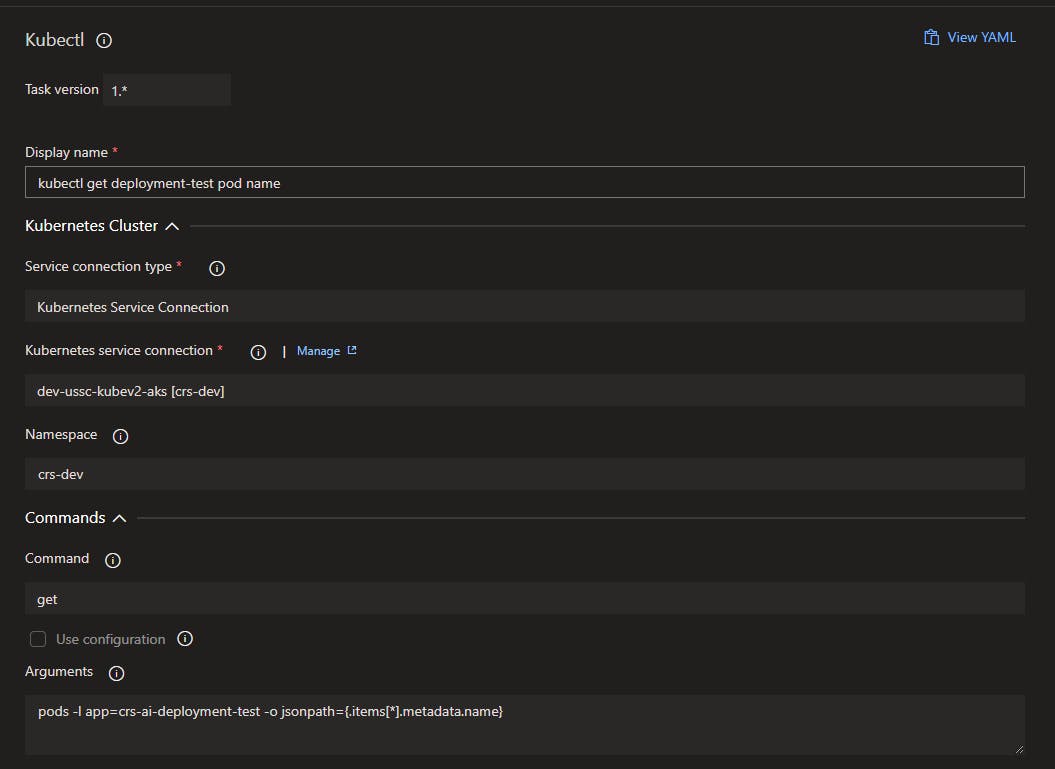
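A small helper for the URL pattern above, as a sketch: it assumes the default cluster domain `cluster.local` (configurable per cluster) and plain HTTP inside the cluster; the function name `service_url` is hypothetical.

```python
def service_url(
    service: str,
    namespace: str,
    path: str = "",
    scheme: str = "http",
    cluster_domain: str = "cluster.local",
) -> str:
    """Build the in-cluster DNS URL of a Kubernetes Service."""
    host = f"{service}.{namespace}.svc.{cluster_domain}"
    # Strip a leading slash so "/api/x" and "api/x" give the same URL
    return f"{scheme}://{host}/{path.lstrip('/')}"
```

For instance, `service_url("crs-id-adverse", "qa", "api/adverse_nonadverse")` targets the adverse/non-adverse API in the `qa` namespace, so the same test can run in each environment by changing only the namespace.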

3.4 Integration with CD Job

I am using an Azure DevOps Release pipeline for the CD job, and will focus only on the deployment testing tasks.

- All you need is a few `kubectl` tasks.

- Step 1: Extract the deployment test pod name, which is needed for the `kubectl exec` command.

  - Use the `kubectl get` command to extract the current pod name of the given deployment/app. Look at the Arguments section carefully; this is where the name is extracted. The arguments are: `pods -l app=crs-ai-deployment-test -o jsonpath={.items[*].metadata.name}`

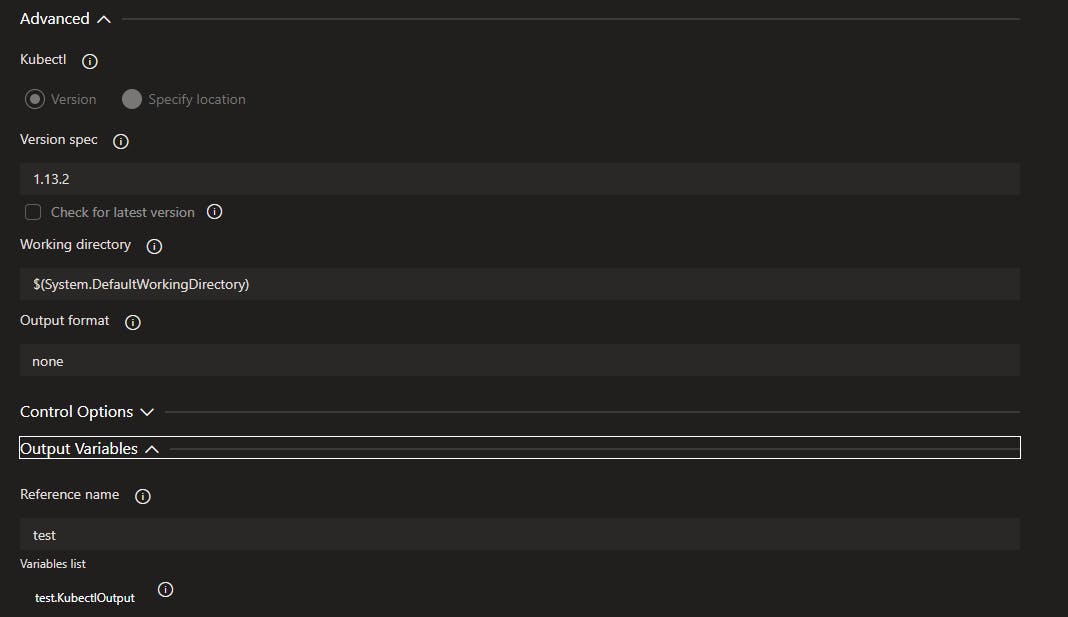

  - Save the output (the pod name) in a reference variable so that it can be used in a later task. This is done under Output variable > Reference name.

  - The output format should always be none. This is set under Advanced > Output format > none.

As you can see in the screenshot above, I am using `test` as the reference name, which makes the variable name `test.KubectlOutput`.

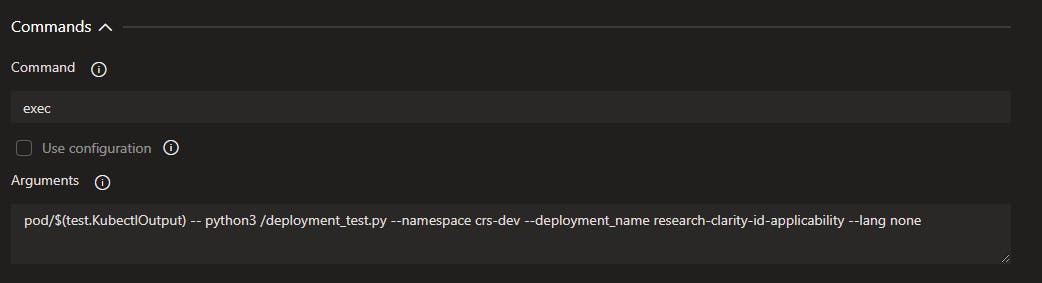
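The jsonpath expression `{.items[*].metadata.name}` returns the names of all matching pods separated by spaces, so during a rolling update (while an old pod is still terminating) the variable can hold more than one name. If you run this lookup from a script step instead, one hedged alternative is to request `-o json` and pick a running pod. `pick_running_pod` and `find_test_pod` are hypothetical helpers; they read only standard Pod fields (`metadata.name`, `metadata.deletionTimestamp`, `status.phase`).

```python
import json
import subprocess
from typing import Any, Dict, Optional


def pick_running_pod(pod_list: Dict[str, Any]) -> Optional[str]:
    """Return the name of the first Running pod that is not being deleted."""
    for pod in pod_list.get("items", []):
        if pod.get("metadata", {}).get("deletionTimestamp"):
            continue  # pod is terminating (e.g. an old replica during a rollout)
        if pod.get("status", {}).get("phase") == "Running":
            return pod["metadata"]["name"]
    return None


def find_test_pod(namespace: str, app_label: str = "crs-ai-deployment-test") -> str:
    """Query the cluster with kubectl and return one running pod name for the label."""
    out = subprocess.run(
        ["kubectl", "-n", namespace, "get", "pods", "-l", f"app={app_label}", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    name = pick_running_pod(json.loads(out))
    if name is None:
        raise RuntimeError(f"No running pod found for app={app_label} in {namespace}")
    return name
```

Skipping pods with a `deletionTimestamp` matters because a terminating pod still reports the `Running` phase until it is gone.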

- Step 2: Run the deployment test with `kubectl exec`

  - Use the `kubectl exec` command to run the Python script. Look at the Arguments section carefully: the pod name stored in `test.KubectlOutput` by the previous task is used here as `$(test.KubectlOutput)`.

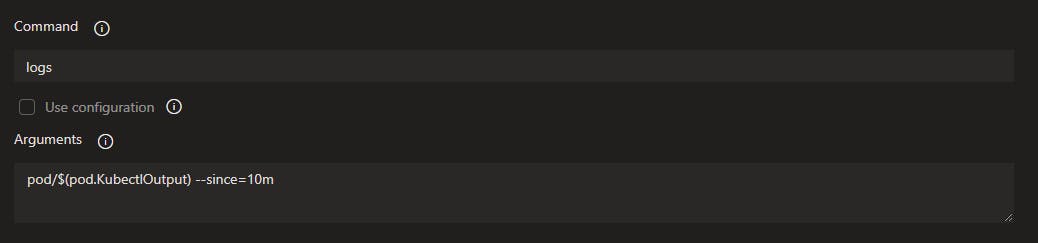

- Step 3 (extra): Print logs if the test fails

  - As in integration testing, where logs were printed to the console after a failed test to help with the investigation, the same can be done in deployment testing.

  - We need a `kubectl logs` command with the `--since=10m` flag.

  - Look at the Arguments section carefully. Here the reference `$(pod.KubectlOutput)` holds the pod name of the microservice under test; it can be extracted the same way we did for the deployment test pod.

  - We also need to set the Control option to `Only when a previous task has failed`, as this task should run only when the previous `kubectl exec` task performing the deployment test fails.
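Steps 2 and 3 can also be reproduced outside Azure DevOps, for example from a script step or locally. A minimal sketch, assuming `kubectl` is on the PATH and already pointed at the cluster: it runs the test script inside the test pod (Step 2) and, only if that fails, prints the last 10 minutes of the microservice pod's logs (Step 3). The function names and the injectable `runner` are hypothetical.

```python
import subprocess
from typing import Callable, List, Sequence

Runner = Callable[..., subprocess.CompletedProcess]


def exec_argv(namespace: str, test_pod: str, script_args: Sequence[str]) -> List[str]:
    """kubectl exec command that runs the test script inside the test pod."""
    return ["kubectl", "-n", namespace, "exec", f"po/{test_pod}", "--",
            "python3", "deployment_test.py", *script_args]


def logs_argv(namespace: str, service_pod: str, since: str = "10m") -> List[str]:
    """kubectl logs command for the microservice pod under test."""
    return ["kubectl", "-n", namespace, "logs", service_pod, f"--since={since}"]


def run_deployment_test(
    namespace: str,
    test_pod: str,
    service_pod: str,
    script_args: Sequence[str],
    runner: Runner = subprocess.run,
) -> int:
    """Run the test; on failure, dump recent service logs. Returns the test's exit code."""
    result = runner(exec_argv(namespace, test_pod, script_args))
    if result.returncode != 0:
        # Mirrors the logs task that runs only when a previous task has failed
        runner(logs_argv(namespace, service_pod))
    return result.returncode
```

In a pipeline, `test_pod` and `service_pod` would come from the Step 1 lookups, and `script_args` would be the `--namespace` and `--deployment_name` flags of the section 5 script; returning the exit code lets the calling step fail in turn.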

So this is how deployment testing can be automated and integrated into the CD job. Let's see it in action.

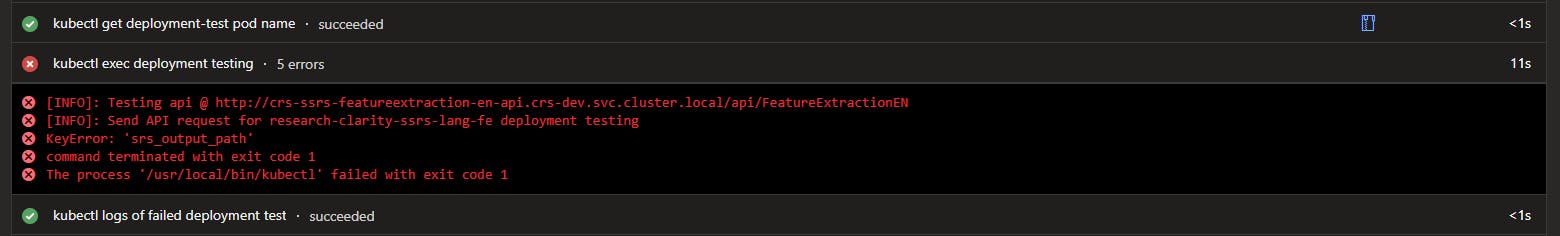

4. Demo

- First, the name of the deployment test pod was extracted. Then the test was run, and since it failed, the logs were printed.

5. Deployment test code

This may change based on your requirements, but you can still refer to it for the idea; as always, the idea is platform agnostic.

```python
import sys
import json
import logging
import argparse
from typing import Dict, Tuple

import requests

# Hide Python tracebacks so only the failure message appears in the pipeline log
sys.tracebacklimit = 0

logging.basicConfig(level=logging.INFO, format="[%(levelname)s]: %(message)s")
logger = logging.getLogger(__name__)

parser = argparse.ArgumentParser()
parser.add_argument("--namespace", type=str, required=True)  # Targeted namespace
parser.add_argument("--deployment_name", type=str, required=True)  # Targeted test, as this is a compilation of all individual tests


def payload_data(deployment_name: str, namespace: str) -> Tuple[str, str, Dict]:
    """Prepare payload data for a specific deployment.

    Parameters
    ----------
    deployment_name : str
        Name of the deployment to test.
    namespace : str
        Kubernetes namespace the deployment runs in.

    Returns
    -------
    str
        API name
    str
        Cluster-internal DNS name of the service
    Dict
        Sample data to send for deployment testing

    Raises
    ------
    ValueError
        If the deployment is not one of the supported deployment tests
        (add all your test names here), e.g. research-clarity-id-applicability,
        research-clarity-id-adv-nonadv
    """
    # TODO: Add new deployment name to the list
    supported_deployment = [
        "research-clarity-id-applicability",
        "research-clarity-id-adv-nonadv",
    ]

    if deployment_name not in supported_deployment:
        raise ValueError(
            f"Given deployment is either wrong or not supported.\n"
            f"It must be one of: {', '.join(supported_deployment)}"
        )
    # TODO: Add all new sample data and API here
    elif deployment_name == "research-clarity-id-applicability":
        api_name = "IDA"
        svc = f"crs-id-applicability-api.{namespace}.svc.cluster.local"
        path = "./data/sample_data_research-clarity-id-applicability.json"
    elif deployment_name == "research-clarity-id-adv-nonadv":
        api_name = "adverse_nonadverse"
        svc = f"crs-id-adverse.{namespace}.svc.cluster.local"
        path = "./data/sample_data_research-clarity-id-adv-nonadv.json"

    with open(path, "r") as file:
        payload = json.load(file)

    return api_name, svc, payload


args = parser.parse_args()
api_name, svc, payload = payload_data(args.deployment_name, args.namespace)

url = f"http://{svc}/api/{api_name}"
logger.info(f"Testing api @ {url}")

header = {"Content-Type": "application/json"}
logger.info(f"Send API request for {args.deployment_name} deployment testing")
# timeout is in seconds; adjust it to your service's latency
response = requests.post(url, headers=header, json=payload, timeout=300)
# Fail on non-2xx responses (HTTPError exits the script with a non-zero code)
response.raise_for_status()

# TODO: Add all new assert conditions here.
if args.deployment_name == "research-clarity-id-applicability":
    response_data = response.json()
    assert (
        response_data["ida_output_path"].split("/")[-1] == "IDA.ndjson"
    ), "Did not receive expected output, test failed"
elif args.deployment_name == "research-clarity-id-adv-nonadv":
    # Treat the body as a plain path string, stripping any surrounding quotes
    response_data = response.text.strip().strip("'\"")
    assert (
        response_data.split("/")[-1] == "classifiation_output.ndjson"
    ), "Did not receive expected output, test failed"

logger.info("Deployment test passed successfully !!!")
```